Google Test Automation Conference 2015

I had the honor of being invited to GTAC 2015 in Boston. It was my first visit to Boston and I have to admit it is a real beauty. I arrived one day before GTAC started and had the chance to walk through this tremendous city. It is a great feeling to sit in the morning sun on the venerable Harvard campus and watch the future elite trot to their lectures.

Thanks to Air Berlin, I also had to spend some time at Primark, as they forgot my luggage in Berlin … But that is a different story.

upday@Google - My favourite talks

Let’s get down to business. There were several great talks. The topics ranged from frameworks for mobile testing through test processes up to massive test racks for Google Chromecast sticks and robots with high-speed cameras. The following selection of talks does not claim to be complete, but these were the sessions that impressed me the most.

The Uber Challenge of Cross-Application/Cross-Device Testing

Uber presented a testing framework that they implemented to allow UI-based testing of interconnected (and cross-platform) apps like their driver and passenger apps. If you are in the same situation and are trying to automate a test that needs communication between two apps (messengers, games, etc.), give it a try!

Robot Assisted Test Automation

Fancy robot hands and fingers combined with a high-speed camera allow reproducible tests on every touchscreen. Their main purpose is to test the accuracy and speed of displays and of the devices in general. But they are so cool that I would love to have one of these things at work.

Chromecast Test Automation

Have you ever asked yourself how Google tests hardware like the Chromecast? This talk gives you the answer: the Google way, which means at massive scale with a lot of intelligence behind it.

Your Tests Aren’t Flaky

I’m pretty sure you know sentences like this: “Ahh - your test is just flaky, just re-run it. If it is green on the second run, everything is fine!” Well, this talk from Alister Scott tells a different story. There is no such thing as a flaky test - if your test results are non-deterministic, it could actually be the fault of the system under test. If you want more after watching his talk - he has a highly recommendable blog (watirmelon.com) that gives a lot of tips about test automation in general.

Large-Scale Automated Visual Testing

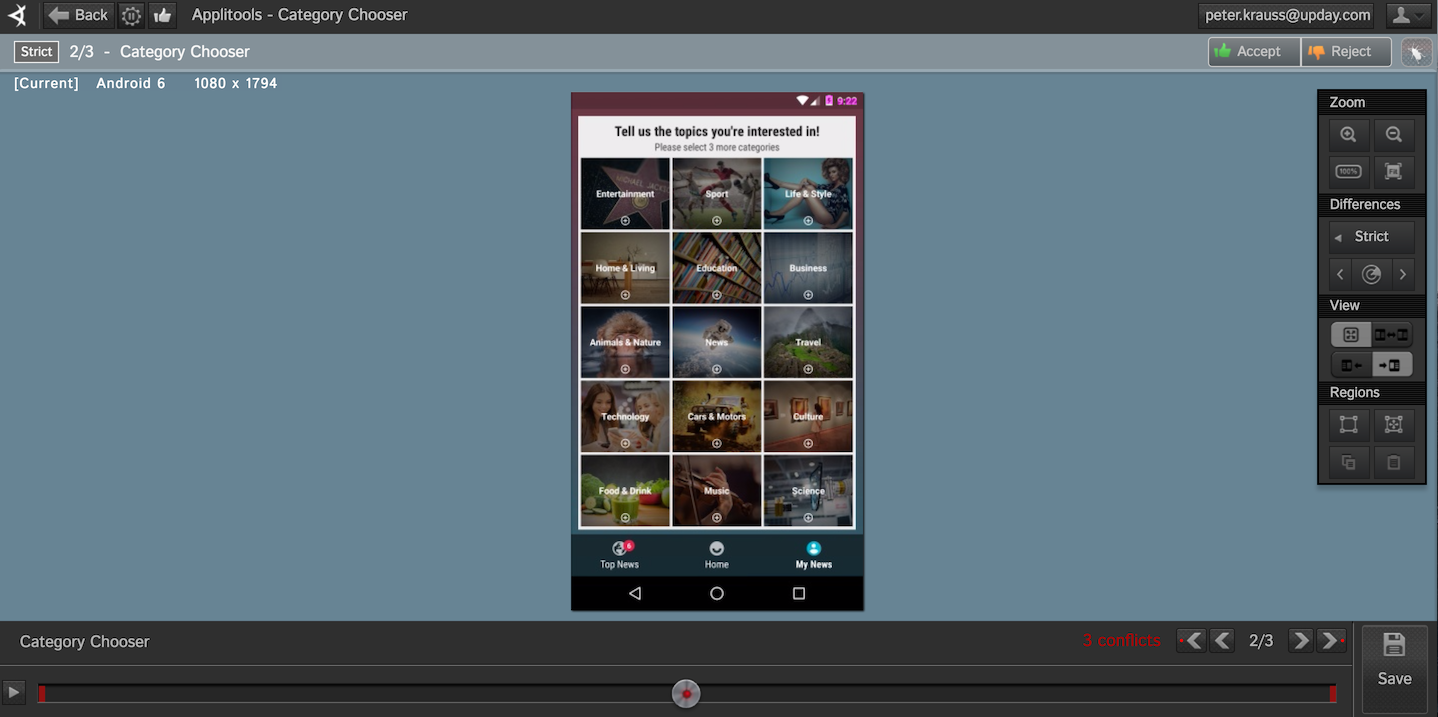

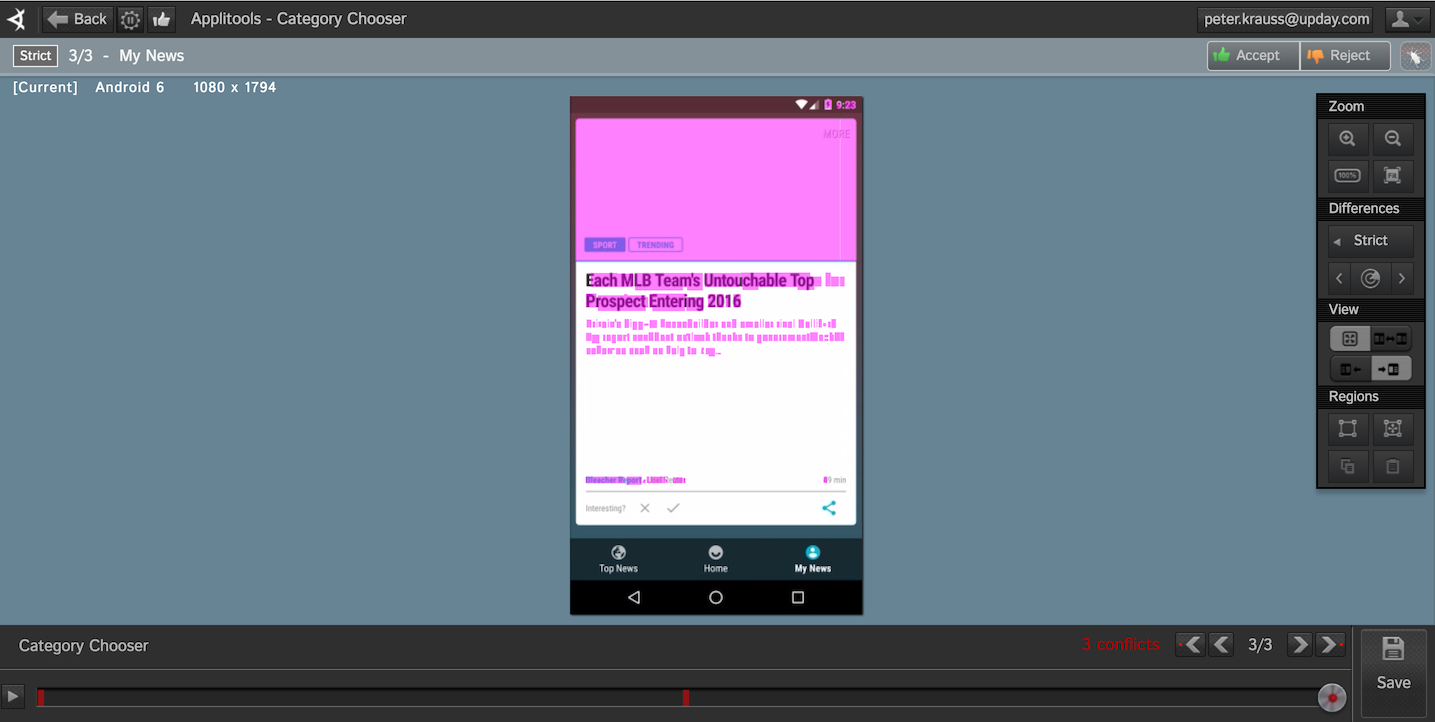

The grand finale of Day 1 was presented by Adam Carmi from Applitools. He showed us Applitools Eyes - a framework for visual testing with massive intelligence in the background. There are integrations for nearly all commonly used web and mobile testing frameworks to upload screenshots to Applitools. Once uploaded, one of the screens is declared the “golden master” and all subsequent uploads of this test step are compared with this master. Maybe you aren’t impressed yet - but you should be, because this isn’t just a simple pixel-perfect comparison. For example, it can compare just the structure of the mobile/web app without considering the content. The resolution doesn’t matter either - so it is possible to compare a screen from a tablet with one from a phone. We are currently evaluating Applitools for our app and are pretty impressed by its capabilities.
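To make the golden master idea a bit more tangible, here is a minimal sketch in Python. It is not the Applitools API and certainly not how Applitools Eyes works internally; `normalize`, `GoldenMasterStore` and the element tuples are hypothetical names I made up for illustration. The sketch assumes each screen has already been reduced to a list of element boxes, ignores the text content, and scales the boxes by the screen size so that a phone and a tablet can be compared.

```python
from typing import Dict, List, Tuple

# Hypothetical representation: (kind, x, y, width, height) in pixels.
# Text content is deliberately left out, so only the structure is compared.
Element = Tuple[str, int, int, int, int]
Layout = List[Tuple[str, float, float, float, float]]


def normalize(elements: List[Element], screen_width: int, screen_height: int,
              precision: int = 1) -> Layout:
    """Express each box as a rounded fraction of the screen size."""
    return sorted(
        (
            kind,
            round(x / screen_width, precision),
            round(y / screen_height, precision),
            round(w / screen_width, precision),
            round(h / screen_height, precision),
        )
        for kind, x, y, w, h in elements
    )


class GoldenMasterStore:
    """Keeps the first layout seen for a test step as its golden master."""

    def __init__(self) -> None:
        self._baselines: Dict[str, Layout] = {}

    def check(self, step: str, layout: Layout) -> bool:
        # The first upload of a step becomes the baseline and passes trivially.
        if step not in self._baselines:
            self._baselines[step] = layout
            return True
        # Every later upload is compared with the stored golden master.
        return self._baselines[step] == layout


store = GoldenMasterStore()

# Same login button on a 1080x1920 phone and a 1536x2048 tablet.
phone = normalize([("button", 108, 192, 864, 192)], 1080, 1920)
tablet = normalize([("button", 154, 205, 1229, 205)], 1536, 2048)
# On this tablet build the button slipped to the middle of the screen.
moved = normalize([("button", 154, 1024, 1229, 205)], 1536, 2048)

first_upload_ok = store.check("login", phone)
tablet_matches = store.check("login", tablet)
moved_matches = store.check("login", moved)
```

The coarse rounding is the crude stand-in for "intelligence" here: it tolerates small differences between devices but still catches an element that moved. A real tool obviously has to do far more than that.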

Las Vegas in Boston

As the evening entertainment, Google invited us to gamble with funny money at blackjack, roulette, poker and craps tables - may the odds be ever in your favor!

Day 2

Hands Off Regression Testing

The guys from Twitter presented Diffy, which they describe on their GitHub page as follows: “Diffy behaves as a proxy and multicasts whatever requests it receives to each of the running instances. It then compares the responses, and reports any regressions that may surface from those comparisons. The premise for Diffy is that if two implementations of the service return “similar” responses for a sufficiently large and diverse set of requests, then the two implementations can be treated as equivalent and the newer implementation is regression-free.” They use it in production, and after watching their presentation you might also consider using it to check whether your newly deployed microservice delivers the expected results.
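The core idea from that description, sending the same request to two implementations and comparing the answers, can be sketched in a few lines of Python. This is only an illustration and not Diffy's implementation: the services are plain functions instead of running instances behind a proxy, and all names (`diff_responses`, `find_regressions`, the two price services) are hypothetical.

```python
from typing import Any, Callable, Dict, Iterable, List, Tuple

Request = Dict[str, Any]
Response = Dict[str, Any]
Service = Callable[[Request], Response]


def diff_responses(expected: Response, actual: Response) -> Dict[str, Tuple[Any, Any]]:
    """Return every field whose value differs, as (expected, actual)."""
    keys = set(expected) | set(actual)
    return {
        key: (expected.get(key), actual.get(key))
        for key in sorted(keys)
        if expected.get(key) != actual.get(key)
    }


def find_regressions(requests: Iterable[Request], primary: Service,
                     candidate: Service) -> List[Dict[str, Any]]:
    """Send each request to both implementations and collect the mismatches."""
    regressions = []
    for request in requests:
        differences = diff_responses(primary(request), candidate(request))
        if differences:
            regressions.append({"request": request, "differences": differences})
    return regressions


# Hypothetical old and new versions of a tiny pricing service.
def old_price_service(request: Request) -> Response:
    return {"item": request["item"], "price": 10 * request["quantity"]}


def new_price_service(request: Request) -> Response:
    total = 10 * request["quantity"]
    if request["quantity"] >= 10:
        total -= 5  # unintended change that only shows up for large orders
    return {"item": request["item"], "price": total}


sample_requests = [{"item": "book", "quantity": q} for q in (1, 5, 10)]
report = find_regressions(sample_requests, old_price_service, new_price_service)
```

The example also shows why the quote insists on a "sufficiently large and diverse set of requests": with only the first two sample requests, the new version would have looked regression-free.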

Mock the Internet

LinkedIn presented Flashback, a mocking system that helps to mock outbound traffic for integration tests. Besides the web layer, it can also be used in mobile apps. They promised to open source it - we would love to use it for mocking all our requests from the app to the backend. In the meantime we are using WireMock to create our mocks.
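If you have never mocked outbound traffic before, the following Python sketch shows the basic idea with nothing but the standard library. It is neither Flashback nor WireMock: it simply patches `urllib.request.urlopen` inside the test process instead of running a separate mock server, and `fetch_headline`, `fake_urlopen` and the backend URL are made up for this example.

```python
import json
import urllib.request
from unittest import mock


def fetch_headline(url: str) -> str:
    """Code under test: load a JSON document from the backend."""
    with urllib.request.urlopen(url) as response:
        return json.loads(response.read().decode("utf-8"))["headline"]


def fake_urlopen(canned: dict):
    """Build a urlopen replacement that serves canned JSON bodies by URL."""
    def _open(url, *args, **kwargs):
        response = mock.MagicMock()
        response.read.return_value = json.dumps(canned[url]).encode("utf-8")
        # urlopen is used as a context manager, so __enter__ returns the response.
        response.__enter__.return_value = response
        return response
    return _open


# Hypothetical backend URL and payload.
CANNED = {"https://backend.example/news/1": {"headline": "GTAC 2015 recap"}}

# No real network traffic happens inside this block.
with mock.patch("urllib.request.urlopen", side_effect=fake_urlopen(CANNED)):
    headline = fetch_headline("https://backend.example/news/1")
```

Patching in-process like this is fine for unit tests, but it only works for code running in the same Python process. For an app talking to a backend you need something that sits on the network, which is exactly the gap that tools like WireMock fill.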

Summary

It was an awesome experience to listen to the crème de la crème of test engineering. The chance to visit Boston and the Google Cambridge office was simply astonishing as well. So if you ever get the chance to visit a GTAC, do not hesitate to take it! Feel free to contact me if you have any further questions or remarks.